Why your phone can’t work out how to take a photo of the apocalypse

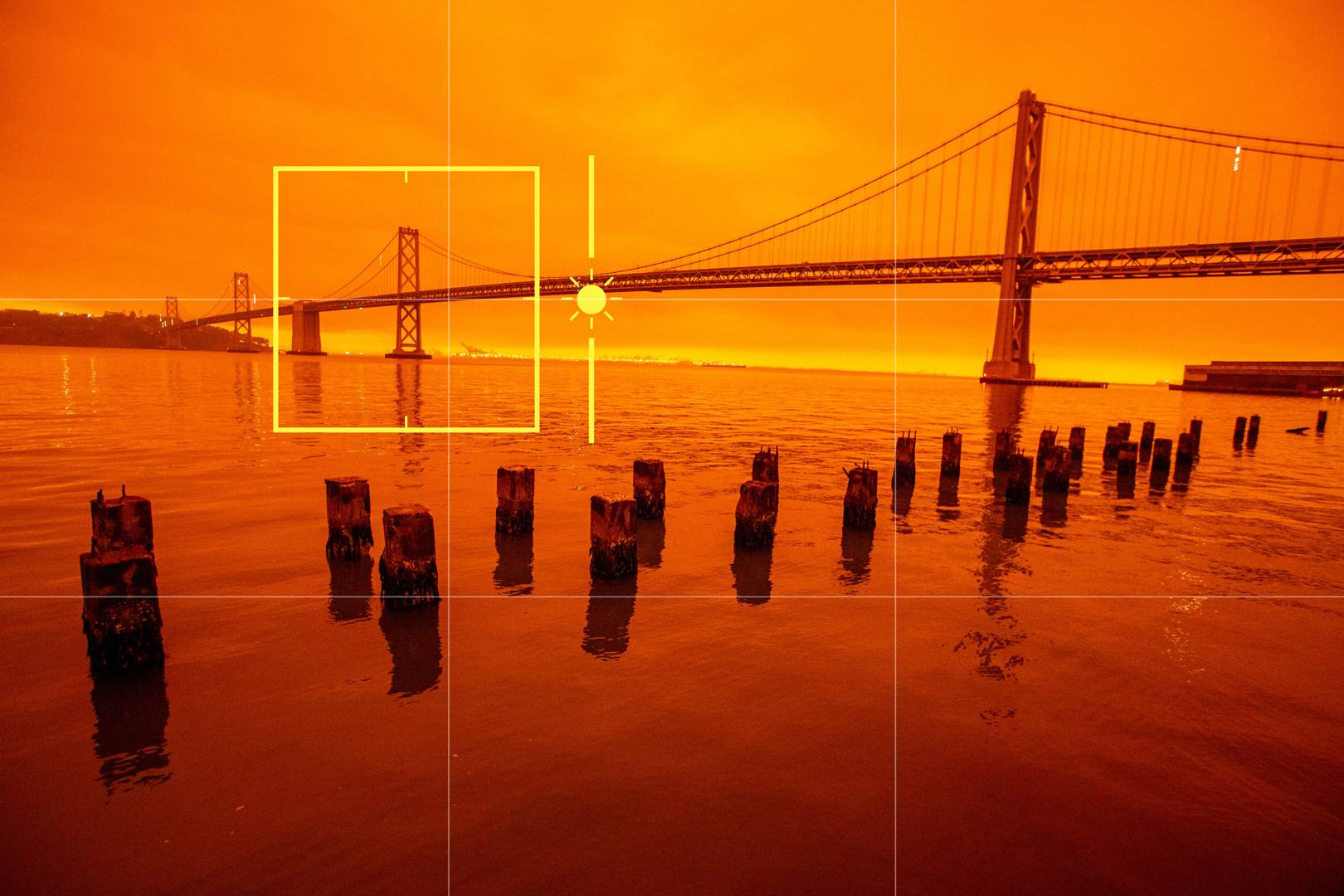

The devastating wildfires on the US West Coast have produced eerie pictures of orange skies. But many don’t represent reality. Why? Your phone has no idea what it’s looking at

by Jon Devo

In normal circumstances smartphone cameras do a great job, perfectly capturing everyday moments. Then along came 2020. Faced with unprecedented wildfires on the US West Coast, iPhones and Androids have been left stumped by a world that looks more like Blade Runner 2049 than September 2020.

Eerie, orange skies totally baffled the image processing algorithms that normally make smartphone snaps look so sharp – resulting in photos that do little to capture the apocalyptic reality. As a result, many people have been forced to fiddle with manual settings for the first time. So why do smartphones struggle in this extreme scenario?

Let’s first dive into how your phone’s camera works. A large share of smartphones have camera sensors produced by Sony, which accounts for roughly 42 per cent of the market. The CMOS sensors that Sony produces go into devices from a wide range of brands, including iPhones, which account for almost half of all smartphones in the US.

The current trend for smartphone cameras is to use a Quad Bayer Filter architecture sitting atop a digital light-sensitive sensor. A Bayer filter is constructed using small banks of pixels filtered to allow Red, Green or Blue light through them. The imaging sensor itself only detects light intensity, so without the Bayer filter, your images would just come out as black and white. This is the first part of the conundrum.

The second part is that because the human eye is typically most sensitive to green light (wavelengths around 530nm), RGGB-based CMOS sensors are built with more pixels covered by green filters in a Bayer array. Using a 12MP smartphone camera as an example, six megapixels (six million pixels) will be allocated to green, with three million each assigned to red- and blue-filtered pixels. Your smartphone’s imaging processor then uses a demosaicing algorithm to create a full-colour 12MP image, estimating the two missing colour values at each pixel from its neighbours.
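To make the arithmetic concrete, here is a minimal Python sketch that counts how many photosites sit behind each colour in an RGGB mosaic. The function name `bayer_channel_counts` and the 4000 x 3000 resolution are illustrative assumptions, chosen only because they give a 12MP sensor.

```python
def bayer_channel_counts(width, height):
    """Count the red, green and blue photosites in an RGGB Bayer mosaic."""
    pattern = (("R", "G"), ("G", "B"))  # one repeating 2x2 tile
    counts = {"R": 0, "G": 0, "B": 0}
    for row in range(2):
        for col in range(2):
            # Number of sensor rows/columns that land on this tile position.
            rows = (height - row + 1) // 2
            cols = (width - col + 1) // 2
            counts[pattern[row][col]] += rows * cols
    return counts


# Hypothetical 12MP sensor: 4000 x 3000 photosites.
print(bayer_channel_counts(4000, 3000))
```

Half of the photosites end up green and a quarter each red and blue, which is where the six-three-three split comes from.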

Quad Bayer filters used in smartphones don’t use four filter arrays, however. Instead, the sensor is constructed with four times the number of pixels, placing four pixels behind each square colour filter instead of just one. As a slight aside, this is why many smartphones are listed as having 48MP cameras, yet only produce 12MP images by default. Now for the next piece of the puzzle.
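The step from 48MP to 12MP can be sketched as simple 2x2 binning: the four pixels behind each colour filter are merged into one value. The snippet below averages them, which is an assumption made for illustration; real sensors and image processors may sum, weight or otherwise combine the readings, and `bin_2x2` is a made-up name.

```python
def bin_2x2(raw):
    """Average each 2x2 block of same-colour pixels into a single value.

    Assumes the grid has an even number of rows and columns.
    """
    binned = []
    for y in range(0, len(raw), 2):
        row = []
        for x in range(0, len(raw[0]), 2):
            block = (raw[y][x], raw[y][x + 1], raw[y + 1][x], raw[y + 1][x + 1])
            row.append(sum(block) / 4)
        binned.append(row)
    return binned


# Toy 4x4 readout: each 2x2 block sits behind one colour filter.
raw = [
    [10, 12, 200, 204],
    [14, 12, 196, 200],
    [50, 54, 90, 94],
    [46, 50, 86, 90],
]
print(bin_2x2(raw))  # [[12.0, 200.0], [50.0, 90.0]]
```

Sixteen readings become four, the same four-to-one reduction that turns a 48MP readout into a 12MP image.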

The dramatic improvement in the quality of smartphone cameras has been brought about largely by the development of powerful image processors and algorithms. Companies like Sony use machine learning to train their imaging algorithms on hundreds of millions of images. They effectively teach your phone’s camera to see. Imaging processors are taught to detect the scene, subject or scenario and then select the necessary exposure settings to achieve the desired shot.

But desirability is built into what the camera has been trained to see and how that particular manufacturer has decided it wants its photos to look. This will impact what white-balance settings the phone selects, as well as shutter speed, light sensitivity, sharpness and colour saturation.

This is why the photos captured on different cameras differ, even when they are essentially using the same camera hardware. The algorithm combination is unique to each brand. This is why Samsung phones typically capture photos that are high in saturation and iPhones are often lauded for producing the most “natural” looking photos, for example.

Ultimately, this is why phones used to capture images of apocalyptic wildfire scenes struggle to produce an image that is accurate to what people on the ground are seeing. Low-frequency red and orange light passes through the particles emitted by the fires more easily than high-frequency blue light, which the smoke scatters away. People can only see light with wavelengths between roughly 400 and 700nm. Red light has a frequency of around 430 trillion hertz, the lowest visible frequency of light.
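The wavelength and frequency figures are two views of the same thing, linked by the speed of light: frequency equals the speed of light divided by wavelength. A small sketch of the conversion, with `frequency_thz` as an illustrative helper name:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def frequency_thz(wavelength_nm):
    """Convert a wavelength in nanometres to a frequency in terahertz."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


for wavelength in (400, 530, 700):
    print(f"{wavelength}nm is about {frequency_thz(wavelength):.0f} trillion hertz")
```

The red end of the visible range, at 700nm, works out to roughly 428 trillion hertz, in line with the figure of around 430 trillion quoted for red light. Violet light at 400nm sits at about 749 trillion hertz.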

What people are essentially seeing is the red and orange light that makes it through the smoke, with most of the other wavelengths scattered away by particulate matter from the fire. Smartphone cameras were never trained for this scenario, yet the shapes they are seeing (houses, cars, landscapes) are all familiar to them.

Because the light is dominated by red, some smartphone cameras are trying to compensate by selecting a white-balance setting that delivers a more neutral or typical representation of those familiar scenes. The resulting images are rendered dull, brown, yellowed, washed out (as seen above) or even blacked out in some circumstances, as the phone’s light metering system struggles to faithfully reproduce what’s being presented to it.
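To see why automatic correction drains the colour from an orange sky, consider the textbook "grey world" heuristic, which assumes the average colour of any scene should be neutral grey. This is a stand-in chosen for illustration and not the algorithm any particular phone uses; the pixel values and function names below are invented.

```python
def gray_world_gains(pixels):
    """Per-channel gains that pull the scene's average colour to grey."""
    n = len(pixels)
    avg_r = sum(p[0] for p in pixels) / n
    avg_g = sum(p[1] for p in pixels) / n
    avg_b = sum(p[2] for p in pixels) / n
    gray = (avg_r + avg_g + avg_b) / 3
    return gray / avg_r, gray / avg_g, gray / avg_b


def apply_gains(pixel, gains):
    """Scale an (R, G, B) pixel by the gains, clipping to the 8-bit range."""
    return tuple(min(255, round(c * g)) for c, g in zip(pixel, gains))


# Invented RGB samples from a smoke-filled, orange sky.
orange_sky = [(230, 120, 40), (210, 110, 30), (220, 100, 50)]

gains = gray_world_gains(orange_sky)
balanced = [apply_gains(p, gains) for p in orange_sky]
print(gains)     # red is turned down, blue is boosted heavily
print(balanced)  # the three channels end up close to equal
```

Under that assumption the red channel is cut and the blue channel is boosted until the scene averages out to grey. A fixed white-balance setting skips this estimation step, which is why the orange survives.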

So how do you take accurate pictures of a red sky during a disaster? It’s a very 2020 question. The most effective way to do this without having to spend too much time editing your pictures is to switch off Auto White Balance. Most phones these days have a “Pro” mode that will allow you to select manual settings. If you have this option, use it and start off by selecting a daylight white-balance setting. This may also be represented as a colour temperature value, in Kelvin (5000K-6500K).

By telling your camera what colour it should be seeing, it will produce an image closer to what you see in front of you. The most useful part about it is that the changes you make to the white-balance or colour-temperature settings are in real time, so you can see it and match it for yourself.
